by Wayne Smith

Design and site usability are not ranking factors for search engine optimization. User engagement cannot matter until a site ranks in search, because only then can engagement be measured: it does not become a factor until a person can click on a link and then click the back button. Navboost is a refinement of the search results that takes place outside of the natural order of the ranking system.

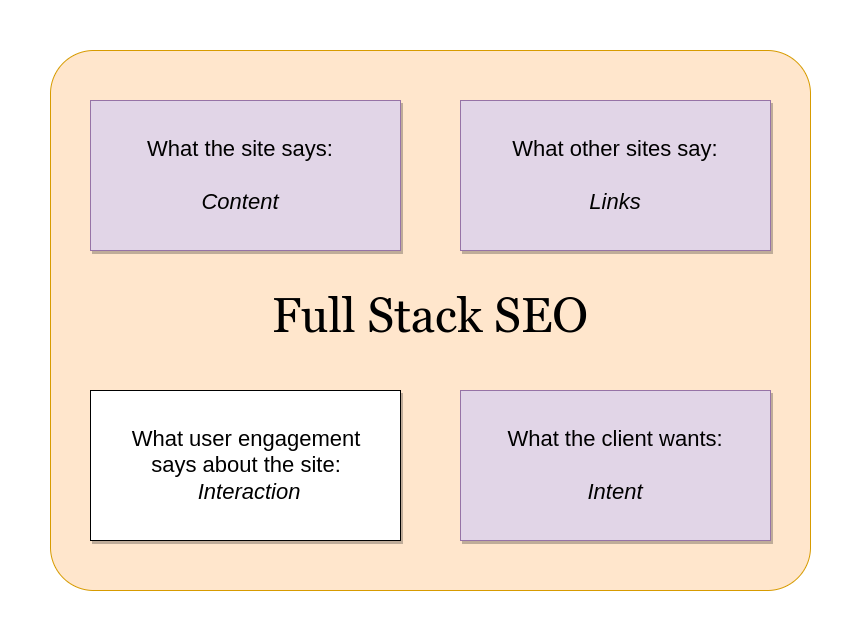

Two of the three pillars of SEO are backlinks and interactions, or in Google's words: "What the web thinks about the site and what the users think about the site." Other webmasters, the source of natural links, will not want to link to a badly designed site. Still, other websites cannot predict other people's behavior, and it is impossible to please everybody.

The group of algorithms at Google for looking at user engagement is called Navboost.

Dr. Marie Haynes of Marie Haynes Consulting (no affiliation with Solution Smith) advances people's knowledge of Navboost in her podcast, "Navboost - Understanding this system changes how we do SEO." More on Navboost from Dr. Haynes can be found at Navboost: part 3 of her series about the attributes in the discovered Google API files.

Search Engine Server Log Data

I looked at the server log data first because it was the log data from running a niche search engine that led me to look at user engagement in the first place. In my case, it started after I saw numerous log entries from the same IP for the same search: site.com/search?terms=keyword+keyword.

I added site.com/goto?site=url&ref=site.com/search?terms=keyword+keyword, which redirected the user and recorded the sites they clicked on in the log file. Yes, any time you click a link that includes a "?data" query string after the URL, the click can be logged, even if anonymously.
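As a rough illustration of that redirect-and-log idea, here is a minimal Python sketch. The function names, the in-memory `log` list, and the `https://site.com/goto` base are hypothetical stand-ins, not the code that ran on my search engine. It also percent-encodes the parameters, which the URL shown above does not do.

```python
from urllib.parse import urlencode, urlsplit, parse_qs

def build_goto_link(target_url, search_url, base="https://site.com/goto"):
    """Wrap an outbound result link so the click passes through /goto first."""
    # urlencode percent-encodes both values so the nested "?" and "+" survive.
    return base + "?" + urlencode({"site": target_url, "ref": search_url})

def handle_goto(request_url, log):
    """Record the click, then return the URL the visitor should be redirected to."""
    params = parse_qs(urlsplit(request_url).query)
    target = params["site"][0]
    ref = params.get("ref", [""])[0]
    # Hypothetical log record: which result was clicked, and from which search.
    log.append({"clicked": target, "from_search": ref})
    return target

log = []
link = build_goto_link(
    "https://example.org/page",
    "https://site.com/search?terms=keyword+keyword",
)
redirect_to = handle_goto(link, log)
```

A real handler would answer with an HTTP redirect to `redirect_to` and should only accept targets that are actually in the index, so the endpoint cannot be abused as an open redirect.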

My belief that having position #1 was key to SEO success was shattered!

The information then, as today, was that the first position in search gets the most traffic; I think the figure is that 25% of clicks go to position #1? Honestly, I don't pay any attention to that number today. The lion's share goes to the site with the title and description that best match the search intent (although the term "search intent" did not exist at that time), and the lion's share does not automatically go to the first site listed. To give the lion's share of traffic to the first site listed, a search engine has to look at client interactions.

With the newly acquired wisdom on SEO, I increased my SEO traffic by over 1000%.

Making bank in third place: Being the top dog, or number one, in search means other sites are trying to copy your tactics. Competitors don't give the site in third place any attention, but customers don't care what place you hold; they care whether the title and description match their intent.

If the search term has mixed-intent results, spots one and two do not affect your traffic when they provide content for a different intent, as displayed in their title and description. Third place, although not targeted by most SEO providers, is often an ideal in-the-money spot. The first-spot CTR is a myth: it is easy to get more traffic than the first spot with a better title and description.

The data is as clear as day

Today Google has far more knowledge of user engagement than I could have ever imagined. The information in the API shows they can keep track of whether the user is logged into Google, using YouTube, or using a Chrome browser. They stated before Congress that they need to use Chrome to ensure the quality of Google search results. And JavaScript can effectively provide hot spots on the results page, such as which site the user held the mouse over before they clicked it.

Beyond showing when a user skips the first listing, the server data shows how long the user was on the site before they returned to the search results, and which result was the last one clicked before the user either searched for a different or suggested term or was done with the query. In short, it provides everything needed to determine which page satisfies the user.
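Those two signals, time away before returning and the last result clicked, can be pulled out of a click log with very little code. The sketch below assumes a hypothetical event format of `(seconds, kind, value)` tuples for one visitor and one query, where a "return" is simply the same search page being requested again. It illustrates the idea only and makes no claim about how Google computes anything.

```python
def summarize_session(events):
    """Summarize one visitor's clicks for one query.

    events: time-ordered (seconds, kind, value) tuples, where kind is
    'search', 'click', or 'return' (hypothetical log format).
    """
    dwell_seconds = {}
    last_click = None
    pending = None  # (url, time of click) awaiting a return to the results
    for seconds, kind, value in events:
        if kind == "click":
            pending = (value, seconds)
            last_click = value
        elif kind == "return" and pending is not None:
            url, clicked_at = pending
            dwell_seconds[url] = seconds - clicked_at
            pending = None
    return {"dwell_seconds": dwell_seconds, "last_click": last_click}

events = [
    (0, "search", "keyword keyword"),
    (5, "click", "a.example"),
    (12, "return", None),   # back on the results page after 7 seconds
    (15, "click", "c.example"),
]
summary = summarize_session(events)
```

Here `a.example` was abandoned after seven seconds, while `c.example` was the last click with no return, which is the closest thing a log has to "this page satisfied the user."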

Empathy for the user

Google acknowledged using interaction data before the DOJ. They said the Chrome browser is important to the quality of their search results.

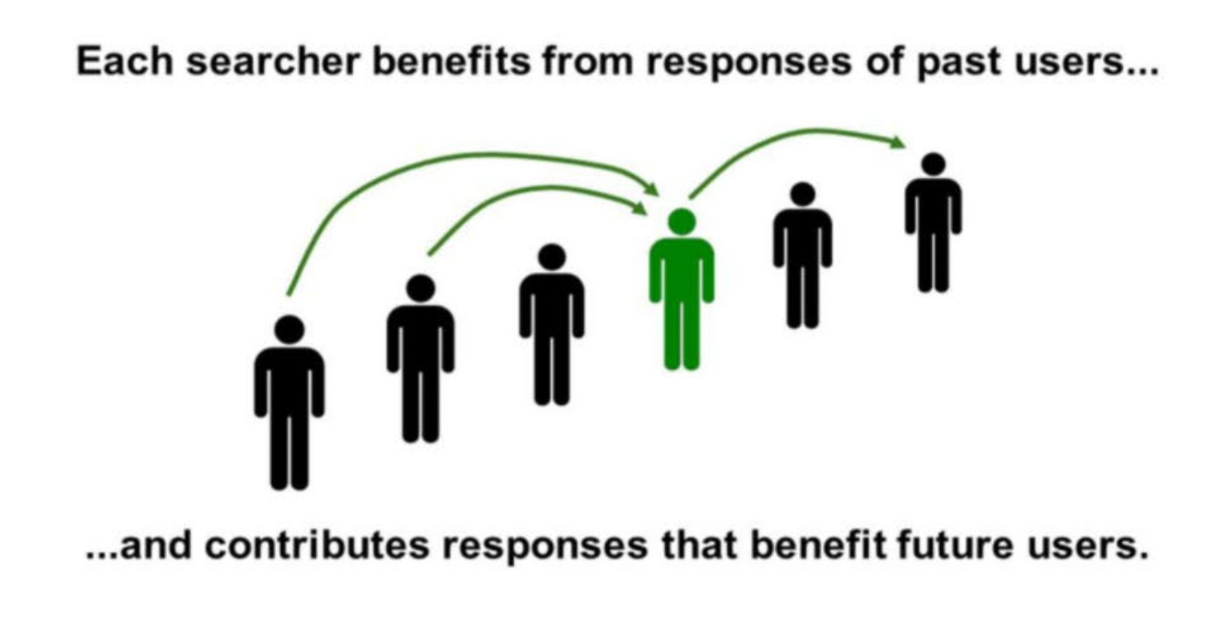

The concept of using how users interact with the search results is not new. In the past, some search engines used user interactions as their primary ranking factor.

One difficulty is many of the interactions may be from bots and not users, which adds noise to the data. If one tries to determine how many possible clients visited their site, they understand the difficulty. Nonetheless, bot traffic can be filtered out.

One way for Google to separate humans from bots is to check whether the user is logged in, and then use only data from logged-in browsers.

Refining My Own SERP pages

My searchable index had both a natural database order and a relevancy refinement.

Both of them could be manually adjusted. I did not use an algorithm for those adjustments, although the relevancy was initially determined algorithmically by giving weight to the text, links, headlines, page description, and title.

The natural order was influenced by PageRank, which was also used by the DMOZ directory, but mostly the curated sites were ranked in the order I believed was best. The influence of PageRank was a tiebreaker.

State of the Art for Scalability

Sorting an entire database of sites at query time takes a lot of CPU time. By putting the database in a presorted order, much of the heavy lifting can be done before anybody uses the search. And when enough results are produced (40 in my case), the search does not need to go to the bottom of the database; it can just stop once the query has enough results. The smaller set of 40 results can then be sorted by relevancy and the top 20 results sent to the browser.
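The early-stopping approach described above can be sketched in a few lines of Python. The 40 and 20 come from the text; the function name, the `matches` and `relevancy` callables, and the sample data are assumptions made for illustration.

```python
def search(presorted_sites, matches, relevancy, candidates=40, page_size=20):
    """Scan a database kept in its natural (presorted) order and stop early."""
    found = []
    for site in presorted_sites:
        if matches(site):
            found.append(site)
            if len(found) == candidates:
                break  # enough results; never reach the bottom of the database
    # Only the small candidate set is sorted. Python's sort is stable, so
    # sites with equal relevancy keep their natural order.
    found.sort(key=relevancy, reverse=True)
    return found[:page_size]

# Hypothetical data: 100 matching sites already in natural order.
sites = [{"id": i, "text": "keyword", "score": i % 7} for i in range(100)]
results = search(
    sites,
    matches=lambda s: "keyword" in s["text"],
    relevancy=lambda s: s["score"],
)
```

The trade-off is that a highly relevant site sitting below the cutoff in the natural order is never seen by the relevancy sort, which is why the natural order itself has to be worth maintaining.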

I believe Google treated 2,000 sites as the stopping point, but don't quote me. The 2,000 number comes from when Google allowed one to look at 200 pages of ten results each. You could never reach the last result in Google or any other search engine, at that time or today.

The User Behavior Analytics from Search Engine Logs

As mentioned, the first thing I noted when looking at my logs was that people needed to skip over the sites listed first, and that they would go through several sites before they found the one they were looking for. It would be better to list the sites they wanted first.

Empathy for the User

Moving the sites people preferred up was very simple. Although the natural order was based on size, that order was not a commandment set in stone: just count how many times people clicked on a site and move it up a notch. The core database would then be updated.
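A minimal sketch of that "count the clicks and move it up a notch" step might look like the following. The rule used here, that a site moves up only when it has more clicks than the site directly above it, is an assumption added to make the example concrete, as are the function and parameter names.

```python
from collections import Counter

def promote_clicked(order, clicked_urls, min_clicks=1):
    """Return a new natural order where clicked sites move up one notch."""
    clicks = Counter(clicked_urls)
    new_order = list(order)
    for i in range(1, len(new_order)):
        above, current = new_order[i - 1], new_order[i]
        # Assumed rule: swap only when the lower site out-clicks its neighbor.
        if clicks[current] >= min_clicks and clicks[current] > clicks[above]:
            new_order[i - 1], new_order[i] = current, above
    return new_order

natural_order = ["a.example", "b.example", "c.example", "d.example"]
clicked = ["c.example", "c.example", "d.example"]
updated_order = promote_clicked(natural_order, clicked)
```

Because the pass moves each site up at most one position, a popular site climbs gradually over repeated updates rather than jumping to the top on a single burst of clicks.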

So the factors were:

- Size and coverage of the topic

- PageRank

- Click-through rates

- Vertical relevancy

And, factors were subject to change.

In looking at how users found sites in my search (I'm one of those self-critical people), I found some sites were buried because they were very small but extremely focused. I twiddled with the database, moving those sites that covered their niche up in the order. Certainly, an AI system would have been helpful to twiddle the database, but such tools were not available to me at that time.

I also had to twiddle with the keyword relevancy because some sites used the word but didn't really have any content for the keyword. There could have been times I twiddled to increase relevancy for non-text content, but I don't recall needing to, and I was not using image alt text. It would have been necessary to twiddle with the relevancy of Flash-based sites, as those were binary, not text-based.

There is no data on how deep into the natural order of the database Navboost operates, and there is no data on how deep into the natural order the AI Overviews go, but AI Overviews may give a clue.

The Impact on Websites

The impact on websites can be seen and is a wake-up call for those who limit their SEO to link building. Sites without engagement or sites where the user views the page and returns to search to select a different result can lose their positions on the SERP.

Website owners will need to adjust their content strategy to account for user engagement and the additional twiddlers used to change the ranking of sites in search. Optimizing for click-through rates has been a good practice for a very long time.

As with AI, it should be expected that these twiddlers will go through different algorithms to achieve good results.
