by Wayne Smith

The canonical tag is understood by many to be a solution to the problem of duplicate content. If such a promise was ever made, it was short-lived and subject to change. In an ideal world, when a search engine found a canonical tag pointing to a different URL, the page with the wrong canonical tag would simply not be indexed until it was corrected (assuming it was an error). But it does not work that way.

RFC 6596, which defines the canonical link relation, is clear: the canonical should be included on web pages to identify the correct URL for accessing the document. Links should point to the canonical URL, and search engines should index the canonical.
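In HTML, the canonical link relation from RFC 6596 appears as a `<link rel="canonical" href="...">` element. Below is a minimal sketch, using only Python's standard `html.parser`, of how a crawler could read that element and resolve a relative href against the page URL. `find_canonical` and `CanonicalFinder` are hypothetical helpers for illustration, not how any particular search engine does it.

```python
from html.parser import HTMLParser
from typing import Optional
from urllib.parse import urljoin


class CanonicalFinder(HTMLParser):
    """Remembers the href of the first <link rel="canonical"> element seen."""

    def __init__(self) -> None:
        super().__init__()
        self.href: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # Tag and attribute names arrive lowercased from HTMLParser.
        if tag != "link" or self.href is not None:
            return
        attributes = dict(attrs)
        # rel can hold several space-separated link types; compare case-insensitively.
        rel_types = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_types and attributes.get("href"):
            self.href = attributes["href"]


def find_canonical(html: str, page_url: str) -> Optional[str]:
    """Return the absolute canonical URL declared in html, or None if there is none."""
    finder = CanonicalFinder()
    finder.feed(html)
    finder.close()
    if finder.href is None:
        return None
    # A relative href is resolved against the URL the page was fetched from.
    return urljoin(page_url, finder.href)


page = '<head><link rel="canonical" href="/blue-widgets.html"></head>'
print(find_canonical(page, "https://www.example.com/pink-widgets.html"))
# https://www.example.com/blue-widgets.html
```

Only the first canonical is kept; a page that declares several conflicting canonicals is itself a signal that something is misconfigured.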

Using canonicals is the best practice for search engines because it saves the engine from processing duplicate content, which serves no purpose or, in some cases, leads the bot into trying to index an endless path of dynamically generated content.

Servers can be configured to add a noindex directive to the HTTP response header (the X-Robots-Tag header), but using the server to tell bots that non-canonical URLs should not be indexed, only after those URLs have been requested, wastes resources and time.

Google considers the canonical a suggestion. Sure, some people may use it incorrectly, but take a situation where one page is blue-widgets.html and another is pink-widgets.html. They are technically not duplicates, but Google will consider them duplicates because everything except one word is the same, and the two pages will cannibalize each other in the SERPs. Google may choose to ignore the canonical tag or to use the other page as the canonical.

Why Duplicate Content Matters

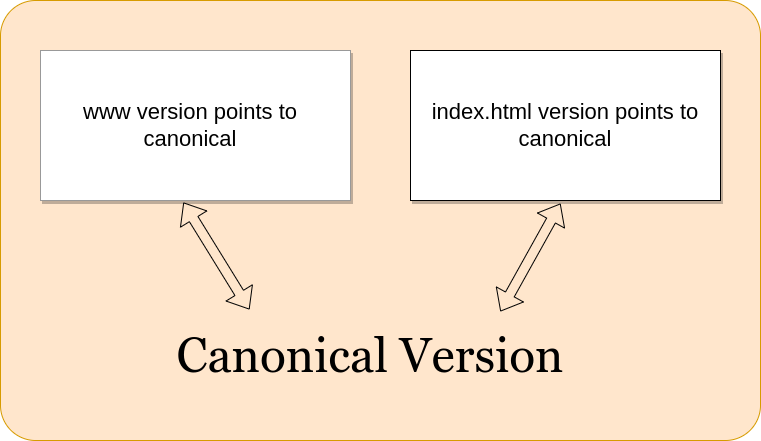

From the user's and the search engine's point of view, duplicate content floods the results for a search term with pages from the same site. Content that cannibalizes the same search terms creates the same problem. What search engines need to do is remove the duplicate content from the SERP. Some duplicate content exists simply because example.com, www.example.com, and example.com/index.htm all present the same page.
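Without a canonical to rely on, a search engine has to collapse these variants itself. Here is a minimal sketch of that normalization, assuming (hypothetically) that https://example.com/ is the preferred form, that every other hostname such as www is an alias of the same site, and that index.htm and index.html are directory index files.

```python
from urllib.parse import urlsplit, urlunsplit

# Hypothetical site settings: the preferred host and the directory-index file names.
CANONICAL_HOST = "example.com"
INDEX_FILES = ("index.htm", "index.html")


def canonicalize(url: str) -> str:
    """Map a URL from this one site onto its single preferred form."""
    parts = urlsplit(url)
    path = parts.path or "/"
    # /index.htm and /index.html are the same document as the directory URL.
    for name in INDEX_FILES:
        if path.endswith("/" + name):
            path = path[: -len(name)]
            break
    # Force the assumed scheme and host; drop the fragment, which never names a separate document.
    return urlunsplit(("https", CANONICAL_HOST, path, parts.query, ""))


variants = [
    "http://example.com/",
    "https://www.example.com",
    "https://www.example.com/index.htm",
    "https://example.com/index.html",
]
print(sorted({canonicalize(u) for u in variants}))  # ['https://example.com/']
```

The canonical tag exists so that the page can state this answer directly instead of leaving the engine to infer it.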

If the page itself can inform the search engine where it should be found (the promise of the canonical tag), search engines don't need to spend time removing duplicate pages.

Having the pages removed because of duplication harms SEO

When pages are indexed and then removed because of duplication, the page never establishes its proper position on the search engine results page. Some of the algorithms used to rank a page run only after the page has appeared in the index, both because some of them evaluate user interactions and because of the economics of spending resources to evaluate a page that may receive few impressions. If a page is indexed one week, removed the next, and then indexed again, it never has time to settle into a position.

Statistically, it creates a profile of poor-quality content on the site. The problems come and go like a chronic illness: they can be difficult to detect, and they can flare up under conditions such as a Google update.

Ian Wortley described the blue-widgets and pink-widgets problem back in 2015, in his post "Canonical Tags Don't Always Work!".

One solution, when Google chooses a canonical for a widget page other than the one you selected, is to change your canonical to the one Google wants to use; all other search engines follow the standard described in RFC 6596, so they will accept whichever canonical you declare. But if the duplication is between www.example.com and example.com, robots.txt will need to be used to prevent Google from indexing the wrong hostname.

... www versus no www ...

An .htaccess rule that delivers a different robots.txt file on the wrong hostname can be implemented to prevent duplicate content on the other hostnames the site also shows up on.

If the server name is not ("!") example.com (say it is www.example.com) and the request is for robots.txt, then serve disallow.txt instead. Disallow.txt disallows bots from crawling the entire hostname that should not be indexed. If the server name is example.com, the normal robots.txt file is served.
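The decision that rule makes can be sketched in Python as a stand-in for the rewrite rule, not a copy of it. The sketch assumes the canonical host is example.com and that disallow.txt contains a blanket `Disallow: /`; `choose_robots_file` and the file contents are illustrative. The standard library's `urllib.robotparser` is used only to confirm that such a file blocks every path.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser

CANONICAL_HOST = "example.com"  # assumed canonical hostname

# Assumed contents of disallow.txt: block every path for every bot.
DISALLOW_TXT = "User-agent: *\nDisallow: /\n"


def choose_robots_file(host: str, path: str) -> Optional[str]:
    """Pick the file to send, or None if this is not a robots.txt request."""
    if path != "/robots.txt":
        return None
    # Host headers may carry a port and mixed case; compare the bare hostname.
    hostname = host.split(":", 1)[0].lower()
    # Same idea as the "!" (not) condition: any host other than the canonical one.
    if hostname != CANONICAL_HOST:
        return "disallow.txt"
    return "robots.txt"


print(choose_robots_file("www.example.com", "/robots.txt"))  # disallow.txt
print(choose_robots_file("example.com", "/robots.txt"))      # robots.txt

# Confirm that the disallow file blocks crawling of the alias host.
parser = RobotFileParser()
parser.parse(DISALLOW_TXT.splitlines())
print(parser.can_fetch("Googlebot", "https://www.example.com/blue-widgets.html"))  # False
```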

A 301 redirect can also be used, but there are times when an alternative URL is useful for site maintenance. If RFC 6596 canonicalization were followed, none of this would be necessary.

Maintenance and troubleshooting URLs can use an allow/deny strategy to block unauthorized IP addresses, but adding another layer of administration creates more opportunities for human error. Maintenance URLs are not canonical.
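The 301 alternative and the allow/deny rule for a maintenance URL can be combined into one routing decision. A minimal sketch follows. It assumes a hypothetical maintenance hostname, maint.example.com, and uses the 203.0.113.0/24 documentation range as a stand-in for the administrators' addresses; `route` is an illustrative helper, not server configuration.

```python
from ipaddress import ip_address, ip_network
from typing import Tuple

CANONICAL_HOST = "example.com"                   # hypothetical canonical host
MAINTENANCE_HOST = "maint.example.com"           # hypothetical maintenance hostname
ADMIN_NETWORKS = [ip_network("203.0.113.0/24")]  # documentation range, stand-in for admin IPs


def route(host: str, path: str, client_ip: str) -> Tuple[int, str]:
    """Return (status, detail): serve the page, 301-redirect it, or deny access."""
    hostname = host.split(":", 1)[0].lower()
    if hostname == CANONICAL_HOST:
        return 200, "serve"
    if hostname == MAINTENANCE_HOST:
        # Allow/deny by IP: only the listed networks may use the maintenance URL.
        addr = ip_address(client_ip)
        if any(addr in net for net in ADMIN_NETWORKS):
            return 200, "serve"
        return 403, "forbidden"
    # Every other hostname (e.g. www.example.com) is permanently redirected.
    return 301, "https://" + CANONICAL_HOST + path


print(route("www.example.com", "/blue-widgets.html", "198.51.100.7"))
print(route("maint.example.com", "/", "203.0.113.5"))
```

Every branch here is another rule someone has to keep correct, which is the administrative cost described above.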

When Jump-to "#" or Hashbang "#!" URLs Are Indexed

By web standards, the hashtag "#" (the URL fragment identifier) is used to jump to a specific part of a web page. It is not a different page with different content, yet Google has indexed jump-to links as URLs distinct from the main page, ignoring the standard and RFC 6596 canonicalization.

The hashbang "#!" has been used to allow thin JavaScript applications to function. For example, /status#!shipped can use cookies or localStorage and an Ajax JSON request from the client to build a page showing the tracking number for the shipment of an order. A request by Googlebot to /status#!rewards would show that Google has earned no rewards, and a request to /status#!messages would show that Google has no private messages.

The hashbang was chosen because it lets thin web applications pass variables to JavaScript and build a unique page in the browser specific to a single user. The user can leave the page and return at any time in the future. Because a hashbang URL should never be indexed, it allows thin applications to operate without configuring the host to block access from bots.

Carolyn Holzman of American Way Media explained the hashtag problem as it relates to the canonical and the helpful content update in her podcast Confessions of an SEO, Season 4, Episode 22: "What's Canonical got to do with it". No affiliation, but I highly recommend her syndicated podcast.

While "?" query parameters can be checked on an Apache server ...

... and a noindex can be added by setting an environment variable and then, if that variable is set, adding noindex to the HTTP response header, a hashtag is different. The hashtag is treated as a delimiter, and the browser never sends the part after it to the host, so that information does not appear in the log files. Apache cannot serve a different document based on a hashtag; it will always be the same document.
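The query-string half of that approach can be sketched in Python: flag the request when certain parameters are present, then add noindex to the response header. The parameter names in `NONCANONICAL_PARAMS` are hypothetical, and `response_headers` is an illustrative helper. On Apache the same idea is expressed with server directives rather than application code.

```python
from typing import Dict
from urllib.parse import parse_qs, urlsplit

# Hypothetical parameters that only produce duplicate variants of a page.
NONCANONICAL_PARAMS = {"sort", "sessionid", "utm_source"}


def response_headers(request_target: str) -> Dict[str, str]:
    """Build response headers for a request target such as '/widgets.html?sort=price'."""
    headers = {"Content-Type": "text/html; charset=utf-8"}
    params = parse_qs(urlsplit(request_target).query, keep_blank_values=True)
    # Equivalent of setting an environment variable when the query matches ...
    flagged = any(name in NONCANONICAL_PARAMS for name in params)
    # ... and adding noindex to the HTTP response header only when it is set.
    if flagged:
        headers["X-Robots-Tag"] = "noindex"
    return headers


print(response_headers("/widgets.html?sort=price"))
print(response_headers("/widgets.html"))
```

The check can only work on the query string because that is all the server receives; nothing after a "#" is ever part of `request_target`.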

When Google indexes hashtag URLs, it creates duplicate content, and then it punishes the site for the duplicate content that it created. A hashtag cannot be used in robots.txt, for the obvious reason that it is the same file. The information after the hashtag is available only on the client side.
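Python's `urllib.parse` can show why the server, its logs, and robots.txt never see the fragment. An HTTP client builds the request line from the path and query only. `request_target` is a hypothetical helper that mimics that step and is not taken from any particular client.

```python
from urllib.parse import urlsplit


def request_target(url: str) -> str:
    """Path plus query, as sent on the HTTP request line; the fragment is left out."""
    parts = urlsplit(url)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    return target


urls = [
    "https://example.com/status#!shipped",
    "https://example.com/status#!rewards",
    "https://example.com/status#!messages",
    "https://example.com/guide.html#section-2",
]
for u in urls:
    # The server sees only the first value; the fragment stays in the browser.
    print(request_target(u), "| client-side only:", urlsplit(u).fragment)
```

All three hashbang URLs reduce to the same request, `/status`, so the host has no way to tell them apart or to answer them differently.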

Confirmation of the problem

In Google Search Console, the Performance report can be filtered by page, using a "URLs containing" filter set to "#", to see the clicks, impressions, and average position of those URLs.
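Once the Performance data is exported, the same check can be scripted. The minimal sketch below assumes the export's pages file is a CSV with columns named "Top pages", "Clicks", "Impressions" and "Position". Those names are an assumption, so check them against the headers in your own export. `hash_url_rows` and `hash_url_rows_from_file` are hypothetical helpers.

```python
import csv
from typing import Iterable, List, Tuple

# Assumed column headers of the exported pages CSV; adjust to match your file.
PAGE_COL, CLICKS_COL, IMPRESSIONS_COL, POSITION_COL = "Top pages", "Clicks", "Impressions", "Position"

Row = Tuple[str, int, int, float]


def hash_url_rows(lines: Iterable[str]) -> List[Row]:
    """Return (url, clicks, impressions, position) for every page URL containing '#'."""
    rows = []
    for record in csv.DictReader(lines):
        url = record[PAGE_COL]
        if "#" in url:
            rows.append((
                url,
                # Strip thousands separators in case the export uses them.
                int(record[CLICKS_COL].replace(",", "")),
                int(record[IMPRESSIONS_COL].replace(",", "")),
                float(record[POSITION_COL]),
            ))
    return rows


def hash_url_rows_from_file(path: str) -> List[Row]:
    # utf-8-sig tolerates a byte-order mark at the start of the export.
    with open(path, newline="", encoding="utf-8-sig") as f:
        return hash_url_rows(f)
```

For example, `hash_url_rows_from_file("Pages.csv")`, where Pages.csv is an assumed file name. An empty list means no "#" URLs received impressions in the exported date range.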

This is empirical evidence of the problem.

Thin applications: the hashbang "#!" URL use case

Thin applications generally have very good user engagement because they are interactive web pages. Users stay on these pages for long periods of time. The user engagement signal, or Navboost, likely protected them from a duplicate content problem.

Table of contents (TOC) "#" use case

These pages may or may not have good user engagement. This is not to suggest that user engagement makes duplicate content good, but if two pages that Google thinks are duplicates both have user engagement, Google may have less confidence in its determination that they should be considered duplicate content.

... Solution Smith tests SEO tactics so you don't have to ...

Full-stack SEO has a lot of moving parts, and it takes time and effort to understand the nuances involved. The costs associated with testing are part of Solution Smith's overhead, resulting in real savings of time and effort for clients. Why reinvent the wheel?