Published

by Wayne Smith

User Intent in Generative Answer Engines Differs from Traditional Search

Before discussing optimization, it’s necessary to understand why users turn to large language models in the first place. Generative answer engines are not used the same way as traditional search engines; they are consulted for synthesis, clarification, and decision support rather than to locate a specific website.

For example, when a user asks for the price of a stock, the immediate intent is data retrieval and interpretation. While this interaction may later lead to interest in a trading platform or portfolio management site, that downstream action is incidental -- not the primary intent of the query. Historically, this type of intent has been described as data mining.

Generative AI systems are designed to satisfy this type of data-oriented intent. When LLMs are applied to collections of documents, users can extract specific information or ask follow-up questions without manually navigating individual pages.

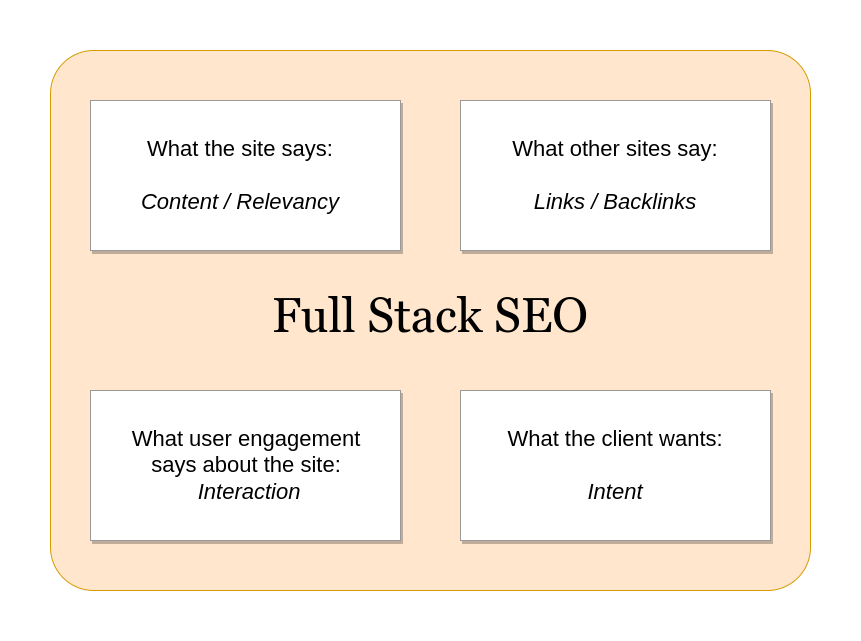

Solution Smith distinguishes between traditional search intent -- locating a specific site -- and the data mining intent fulfilled by LLMs. At the same time, generative systems can improve relevance within document retrieval systems, including web search. To account for this shift, Solution Smith uses the term AI-Aware SEO to describe an evolution of traditional, full-stack SEO.

The Challenges of AI-Aware Full-Stack SEO

- Ensuring relevant information is accessible to LLMs -- brand presence, authority, and distribution

- Earning attribution and citation within generative responses

Once a user’s initial intent is satisfied, the interaction may end as a zero-click search. However, beyond the value of brand awareness, attribution and citation can serve a secondary role by aligning with the user’s intent after an answer has been delivered.

For example, when a user asks about the cost of emergency repairs for a broken water pipe, the immediate intent may be informational, but the follow-on intent is often transactional -- locating a service provider.

From a marketing perspective, this is similar to deciding where to place a brochure for opening an IRA inside a bank. If a customer’s intent is to deposit funds into a checking account and the brochure sits between them and the deposit counter, it becomes an obstacle and is ignored. If the brochure is placed between the deposit counter and the exit, it aligns with the customer’s next likely intent -- and is far more likely to be picked up.

LLM Inclusion

The first stage of inclusion is ensuring a page is visible as a result within search. LLMs frequently use search engines as an initial seeding mechanism for information, but they do not stop there. Generative systems expand into related queries and adjacent topics when assembling responses.

As a result, a site does not need to rank in the top ten results for an exact query to be included in LLM-generated answers. Pages that surface for semantically related queries and cover the same topical area (that is, sit close to the original query in semantic vector space) may also be incorporated. In many cases, these adjacent queries are less competitive, allowing a site to leapfrog into visibility within a more competitive intent space.
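To make the idea of "semantically related" queries concrete, here is a minimal sketch that scores candidate queries against a seed query using cosine similarity. It is an illustration only: production systems rely on learned embeddings, whereas this toy uses plain word counts as a stand-in. The function names, the example queries, and the 0.3 threshold are all assumptions made for the example.

```python
import math
from collections import Counter


def term_vector(text: str) -> Counter:
    """Toy stand-in for an embedding: lowercase word counts."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine of the angle between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def related_queries(seed: str, candidates: list[str], threshold: float = 0.3) -> list[str]:
    """Return candidates at or above the (assumed) threshold, most similar first."""
    seed_vec = term_vector(seed)
    scored = [(cosine_similarity(seed_vec, term_vector(c)), c) for c in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for score, c in scored if score >= threshold]


# Hypothetical queries, for illustration only.
adjacent = related_queries(
    "broken water pipe repair cost",
    ["best pizza near me", "burst pipe cost", "emergency water pipe repair"],
)
# adjacent == ["emergency water pipe repair", "burst pipe cost"]
```

A page that answers one of the adjacent queries well is, in this simplified picture, a candidate for inclusion even if it never ranks for the seed query itself.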

LLM Knowledge Base (branding): Not all information used by LLMs is derived from live search. Large language models maintain internal knowledge representations based on entities and their relationships. Brand mentions -- not just links -- have been observed to influence both modern SEO systems and the AI layers used by search engines today. Because a brand functions as an entity, questions about that brand may be answered directly from an LLM’s knowledge base without requiring active search retrieval.

LLM Predictive Intent: Part of an AI model’s behavior is predictive in nature. If a query is about a broken pipe, the system may infer that the next likely question relates to repair services or replacement parts. Fan-out queries can be generated from this prediction, or the system may proactively suggest a related follow-up query.
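As a rough illustration of fan-out, the sketch below expands a topic into predicted follow-on queries from a small set of templates. Real generative systems infer follow-ups with a model rather than fixed templates. The templates, the `fan_out` name, and the example topic are hypothetical, chosen only to mirror the broken-pipe example.

```python
def fan_out(topic: str, templates: list[str]) -> list[str]:
    """Expand a topic into follow-on queries, dropping duplicates but keeping order."""
    seen: set[str] = set()
    queries: list[str] = []
    for template in templates:
        query = template.format(topic=topic).strip().lower()
        if query not in seen:
            seen.add(query)
            queries.append(query)
    return queries


# Hypothetical follow-on templates for an informational query about a problem.
FOLLOW_ON_TEMPLATES = [
    "{topic} repair services",
    "{topic} replacement parts",
    "{topic} repair services",  # duplicate on purpose, to show de-duplication
]

predicted = fan_out("broken pipe", FOLLOW_ON_TEMPLATES)
# predicted == ["broken pipe repair services", "broken pipe replacement parts"]
```

Each predicted query is another place where a page can be discovered, which is why content that addresses the follow-on intent can earn inclusion even when the original question was purely informational.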

This predictive behavior is a fundamental shift. Inclusion within data-mining workflows and knowledge panels is not determined solely by how many times a site or brand is mentioned, but also by how much observed interest and contextual relevance exist around the information being provided.

LLM Content Relevance

Apart from mining search engine results, which are influenced by factors such as keyword usage and link signals, LLMs evaluate the informational substance of the content itself. During extraction, generative systems look for semantically related and salient terms that indicate subject-matter understanding and topical coverage. LLMs may account for the authority of the sites they reference, while still surfacing the specific source that provides the answer as a modular data block within the response.

Content that lacks substantive relevance -- regardless of its ranking or popularity for a given query -- is not likely to be incorporated into LLM-generated responses. Pages that fail to demonstrate topical coherence or informational depth may be filtered out during synthesis, even if they perform well in traditional search results.
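The filtering described above can be sketched as a simple coverage check: what share of a topic's salient terms does a page actually contain? This is a deliberately crude approximation, not a description of how any particular LLM evaluates content. The `Page` fields, the salient-term set, the example URLs, and the 0.5 cutoff are assumptions for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    search_rank: int  # position in traditional search results (1 = best)
    text: str


def coverage(text: str, salient_terms: set[str]) -> float:
    """Fraction of the salient terms that appear at least once in the text."""
    if not salient_terms:
        return 0.0
    words = set(re.findall(r"[a-z0-9]+", text.lower()))
    return len(words & salient_terms) / len(salient_terms)


def select_sources(pages: list[Page], salient_terms: set[str], min_coverage: float = 0.5) -> list[Page]:
    """Keep pages that meet the coverage cutoff, ordered by coverage, not by rank."""
    kept = [p for p in pages if coverage(p.text, salient_terms) >= min_coverage]
    return sorted(kept, key=lambda p: coverage(p.text, salient_terms), reverse=True)


# Hypothetical pages and terms, for illustration only.
terms = {"pipe", "leak", "plumber", "shutoff"}
pages = [
    Page("https://example.com/pipe-deals", 1, "Top deals on pipe fittings. Buy now!"),
    Page(
        "https://example.com/burst-pipe-guide",
        14,
        "If a pipe bursts, close the shutoff valve, contain the leak, and call a plumber.",
    ),
]

selected = select_sources(pages, terms)
# Only the lower-ranked but more substantive guide survives the cutoff.
```

In this toy example the page ranked first is dropped because it covers only one of the four salient terms, while the page ranked fourteenth is kept.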